- PolicyWatch 3270

The Search for Extremism: Deploying the Redirect Method

Results from a new terrorism prevention project in the United States show how targeted online advertising tools can be used to undermine white supremacism and Islamist-inspired extremism.

On January 15, Ryan Greer and Vidhya Ramalingam addressed a Washington Institute roundtable on countering violent extremism. Greer, the director for program assessment and strategy at the Anti-Defamation League, has consulted or served with numerous organizations on CVE issues, including the Department of Homeland Security, the Pentagon, the State Department, and the Global Community Engagement and Resilience Fund. Ramalingam is the founder of Moonshot CVE, a company that uses technology to disrupt violent extremism globally. Previously, she led the EU’s first intergovernmental project on far-right terrorism, initiated by the governments of Denmark, Finland, the Netherlands, Norway, and Sweden. This PolicyWatch is based on discussions held at the roundtable.

Christopher Hasson, who was sentenced to thirteen years in prison after being caught plotting domestic terrorism, was found to have entered threatening queries into Google such as “how can white people rise against the jews.” Dylann Roof, the perpetrator of the 2015 Charleston church massacre, may have searched for information on “black on white crime” and found radicalizing materials. Such online radicalization is certain to continue, particularly because preventive methods to “off-ramp” potential extremists before they cross the line into criminality have been implemented unevenly, and typically at a far smaller scale than strategies that aim to disrupt and arrest, such as counterterrorism.

To address this gap, Moonshot CVE and the Anti-Defamation League (ADL) recently ran a joint program to address search-based online extremism in the United States. They sought to reduce the likelihood that online extremist propaganda would be consumed or legitimized. Beyond that, they aimed to create a pilot project and produce data that not only offer insights into the trends and themes of extremist propaganda in the United States, but also help inform best practices in undermining that propaganda.

BEHIND THE PROJECT

Last year, ADL and Moonshot worked in partnership with the Gen Next Foundation to deploy a program known as the Redirect Method. They placed advertisements on Google redirecting users to videos that undermine extremist narratives, targeting individuals searching for terms that indicated an intent to consume extremist propaganda or a sympathy toward extremism more broadly. The program was deployed in the United States as a pilot project to explore how private sector tools can counter online extremism in a way that allows researchers to measure results and tailor approaches to what works.

The project ran ads targeting interest in both Islamist-inspired extremist propaganda and white supremacist extremism. The latter category was chosen as an area of focus for several reasons: the trends in this threat within the United States, the gap in domestic programming on online white supremacism, and the opportunity presented by ADL’s longstanding expertise on the topic. ADL research indicates that domestic extremists killed at least fifty people in 2018, a sharp increase from the thirty-seven killed in 2017, making 2018 the fourth-deadliest year since 1970. Of the 2018 killings, 98% were at the hands of right-wing extremists, and 78% specifically at the hands of white supremacists.

The Redirect Method—designed by Moonshot in partnership with Google’s Jigsaw—focuses on Google searches because they provide a unique window into the true thoughts and feelings of the at-risk audience. While users post on performative platforms (e.g., Facebook, Twitter) conscious that their activity will frame how others perceive them, non-performative sources like search traffic data show what information and content users are seeking online, rather than what they are posting as a social performance. As search traffic is private—and never edited, removed, or banned—it can serve as a consistent indicator of appetite for violent content and help discern the preferences of its consumers.

The researchers felt comfortable using ads because commercial advertisers are afforded great content control and a range of metrics that can be valuable in assessing reach and impact. The program reached users whose searches indicated interest in or a desire to join a violent movement, commit an extremist act, or consume violent extremist propaganda. Keywords indicating mere curiosity toward or research into violent extremism were not targeted. For example, a search for the white supremacist propaganda “The Turner Diaries” would not trigger a program advertisement, while a search for “Turner Diaries PDF,” which indicates a clear intent to consume the content, would trigger one. In all, the program targeted Google searches for 17,134 keywords related to white supremacism and 85,327 keywords related to Islamist-inspired extremism.
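The line between curiosity and intent described above can be pictured as a simple keyword lookup. The following is only a minimal sketch, assuming exact matching of a normalized query against a curated list; the names `INTENT_KEYWORDS`, `normalize`, and `should_show_ad` are hypothetical, and the source does not describe how the program's keyword matching was actually configured.

```python
# Hypothetical sketch: exact-match keyword targeting after normalization.
# The set holds only the single example named in the text; the program's
# real keyword lists and match settings are not described in the source.
INTENT_KEYWORDS = {"turner diaries pdf"}


def normalize(query: str) -> str:
    """Lowercase the query and collapse runs of whitespace."""
    return " ".join(query.lower().split())


def should_show_ad(query: str) -> bool:
    """Return True only when the normalized query is a targeted keyword."""
    return normalize(query) in INTENT_KEYWORDS
```

Under these assumptions, `should_show_ad("Turner Diaries PDF")` is true, while `should_show_ad("The Turner Diaries")` is false, mirroring the example in the text.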

The redirect advertisements appeared just above the organic search results and, as such, aimed not to censor those results but merely to offer an alternative. When users clicked on the advertisement, they were taken to a range of videos intended to undermine the messages in the content that would otherwise be consumed. Videos included messages from former extremists who have left the movement and other “credible messengers.” Though the videos are not guaranteed to dissuade anyone from joining an extremist movement, they are a promising way to increase the likelihood that individuals consume safe alternative content rather than violent extremist content.

RESULTS

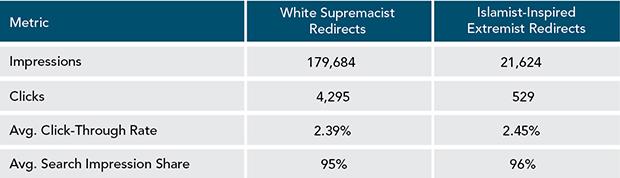

The program ran in all 3,142 counties and county-equivalents in the United States between May and November 2019. Program advertisements received over 4,000 clicks redirecting to alternative content for the white supremacism deployment, and over 500 clicks for the Islamist-inspired extremism deployment. The deployment also generated over 200,000 impressions (the number of times the ad was shown to at-risk individuals), along with a high click-through rate (clicks as a percentage of impressions) and a high search impression share (the percentage of eligible searches for an at-risk keyword on which an ad was actually shown).
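The two rate metrics defined above are simple ratios, and a short sketch may make the definitions concrete. The function names and the sample figures below are illustrative assumptions only; the source reports neither the program's actual click-through rate nor its search impression share.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Clicks as a percentage of impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return 100.0 * clicks / impressions


def search_impression_share(impressions: int, eligible_searches: int) -> float:
    """Percentage of eligible searches on which the ad was actually shown."""
    if eligible_searches <= 0:
        raise ValueError("eligible_searches must be positive")
    return 100.0 * impressions / eligible_searches


# Hypothetical figures, chosen only to illustrate the arithmetic.
example_ctr = click_through_rate(clicks=50, impressions=1000)
example_share = search_impression_share(impressions=800, eligible_searches=1000)
```

With these made-up inputs, the click-through rate is 5% and the search impression share is 80%.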

In all, those searching for white supremacist extremist content consumed 5,509 minutes of video undermining those messages, and those searching for Islamist-inspired extremist content consumed 534 minutes of safer content. To be clear, the researchers do not know the final disposition of the viewers. Were they “hate watching” the content? Did it inspire them to shift away from hate and violence, or did it cause them to second-guess extremist propaganda only briefly? As of now, the answers to those impact questions are unknown, but the researchers can say that the at-risk audience consumed over 6,000 minutes of alternative content (6,043 minutes across the two deployments), time that could have been spent consuming violent extremist content instead.

The aftermath of the tragic shooting in El Paso, Texas, during the time of the Redirect Method deployment illustrates the program’s promise. After that tragedy, the campaign saw a 104% increase in impressions related to white supremacist extremism and a 59% increase in clicks. This effect was even more significant in El Paso itself, where a 192% increase in impressions was observed. The researchers measured a 224% increase in watch time for a playlist designed to undermine the white supremacist narrative of “Fighting for white heritage.” This means that at-risk users looking for content based on searches such as “Prepare for race war” consumed alternative program content—with the increased demand for extremist content surpassed by an appetite for content that undermines such messages.
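The percentage increases cited above follow the usual before-and-after formula, sketched below. The source does not publish the underlying baseline counts, so the function name `percent_increase` and the numbers used are hypothetical and serve only to show how such a figure is computed.

```python
def percent_increase(before: float, after: float) -> float:
    """Change from `before` to `after`, as a percentage of `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100.0 * (after - before) / before


# Hypothetical counts: rising from 100 to 204 impressions is a 104% increase,
# meaning the later figure is slightly more than double the baseline.
example_increase = percent_increase(before=100, after=204)
```

Read this way, a 104% increase in impressions means they roughly doubled, and a 224% increase in watch time means it rose to more than three times its earlier level.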

The program also yielded considerable insights into online propaganda more broadly. For example, those at risk of white supremacism often express a clear interest in consuming music by white supremacist bands such as Blue Eyed Devils and Vaginal Jesus, and in looking up influential personalities ranging from a neo-Nazi Canadian YouTuber to Brenton Tarrant—the perpetrator of the Christchurch massacre—and, of course, Adolf Hitler. Islamist-inspired extremists tend to search for terms indicating sympathy or respect for influential personalities such as Omar Abdul Rahman (the so-called “Blind Sheikh”), Osama bin Laden, and Abdullah Azzam (a founding member of al-Qaeda).

IMPLICATIONS

Creating the infrastructure to run county-by-county campaigns aimed at reaching potential violent extremists across the United States presents new opportunities for delivery of highly localized online prevention and intervention programming. In the future, the researchers hope to refer users to local service providers (e.g., mental health professionals) who can sustain engagement with them both online and offline.

Extremists are now recruited at the speed of the Internet, yet resources to prevent extremism remain uneven. Much more needs to be done domestically to counter both terrorism and violent extremism. The deployment of the Redirect Method shows promise, lends itself to measurement, and is inherently scalable. When scaled, it could be the missing link between the online and offline space in the fight against violent extremism across the United States.